My Role

System Architect & Lead Developer

Duration

3 Months (Ongoing)

Key Tech

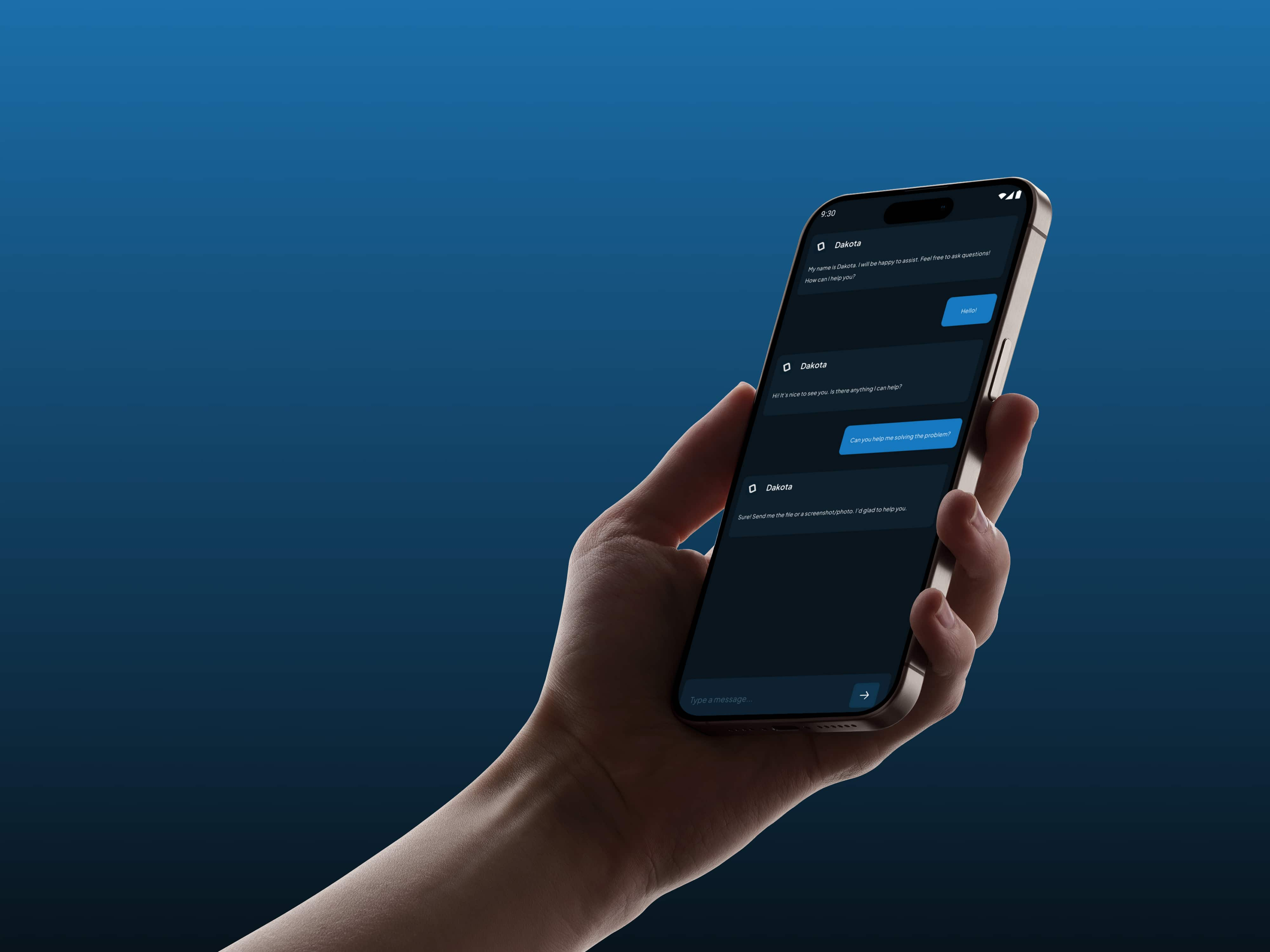

Project Overview

DAKOTA is an experimental AI assistant powered by the Dakota AI Response Layer (DARL 0.1), a highly customized micro-language model built with the nanoGPT framework and trained on a small, focused custom dataset of human-like greetings and social prompts. Although still in an early phase, Dakota shows how micro-models can deliver fast, lightweight responses.

The Challenge

The objective was to show that useful AI functionality does not require massive computational resources. Large Language Models (LLMs) are often too slow and resource-intensive for simple conversational tasks. The core challenge was to validate a specialized alternative: a micro-language model optimized for resource efficiency that could still deliver high-quality, near-instant responses to social prompts, without the heavy computational demands typical of larger LLMs.

The Solution

I engineered the Dakota AI Response Layer (DARL 0.1), a micro-language model built using nanoGPT and trained on a minimal custom dataset. It powers DAKOTA, an experimental assistant that produces fast, lightweight conversational output.
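
To make "trained on a minimal custom dataset" more concrete, the sketch below shows one way a small greetings corpus could be turned into token IDs before training. It is an illustration only, not DARL's actual pipeline: the sample exchanges, the `User:`/`Dakota:` transcript format, the function names, and the 90/10 split are all assumptions, chosen to resemble the character-level data preparation in nanoGPT's example scripts.

```python
# Illustrative sketch only: hypothetical sample data, not the real DARL dataset.
SAMPLE_GREETINGS = [
    "User: hi\nDakota: Hello! How can I help?",
    "User: good morning\nDakota: Good morning! Hope your day goes well.",
    "User: how are you?\nDakota: Doing great, thanks for asking!",
]


def build_vocab(text):
    """Map each distinct character to an integer ID and back."""
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}
    itos = {i: ch for ch, i in stoi.items()}
    return stoi, itos


def encode(text, stoi):
    """Convert a string into a list of character IDs."""
    return [stoi[ch] for ch in text]


def decode(ids, itos):
    """Convert a list of character IDs back into a string."""
    return "".join(itos[i] for i in ids)


def split_train_val(ids, train_fraction=0.9):
    """Hold out the tail of the token stream for validation (assumed 90/10)."""
    cut = int(len(ids) * train_fraction)
    return ids[:cut], ids[cut:]


# Join the exchanges into one corpus, separated by blank lines.
corpus = "\n\n".join(SAMPLE_GREETINGS)
stoi, itos = build_vocab(corpus)
ids = encode(corpus, stoi)
train_ids, val_ids = split_train_val(ids)

print(f"vocab size: {len(stoi)}, train tokens: {len(train_ids)}, val tokens: {len(val_ids)}")
```

With a corpus this narrow, the character vocabulary stays at a few dozen symbols, which is part of why a micro-model can remain small and respond quickly.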